Tools

Tools on the Abacus.AI platform are reusable components that enable integration with external systems and execution of custom code. They provide a structured way to extend platform capabilities and automate workflows.

Types of Tools

The Abacus.AI platform supports several types of tools:

- Python Function: Custom Python code tools for executing arbitrary logic

- Connector: Integration tools that allow you to connect to external platforms (e.g., Jira, Confluence)

Creating Tools

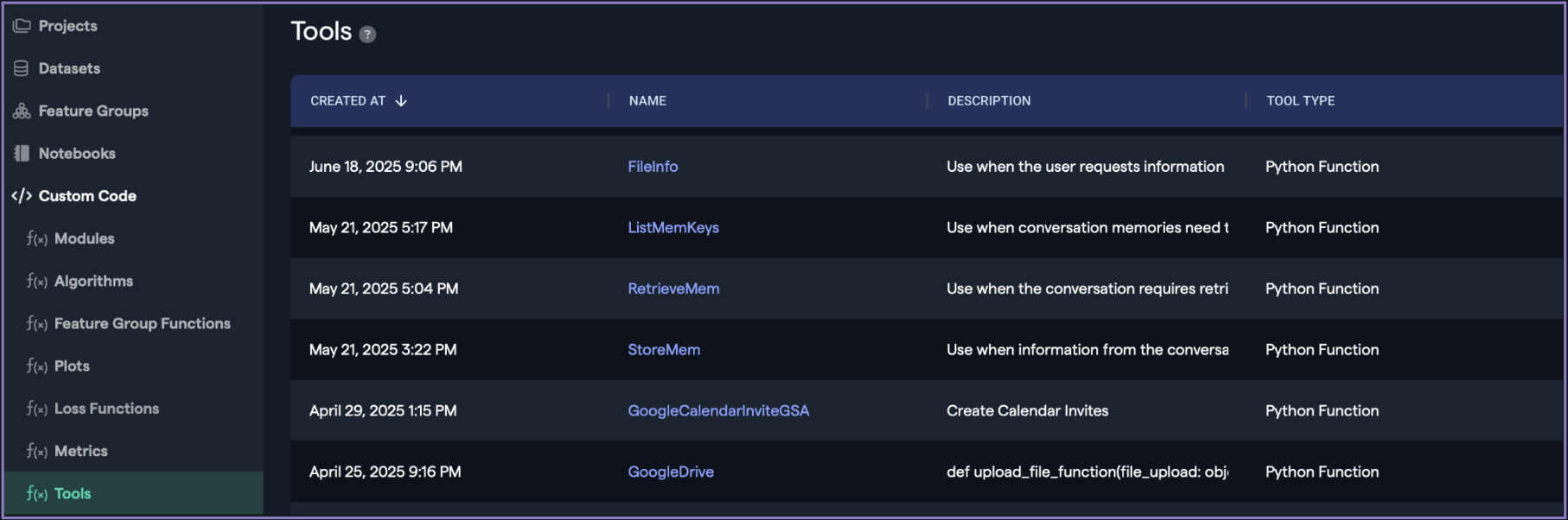

To create a new tool on the Abacus.AI platform:

- Navigate to Custom Code in the left navigation bar

Note: Tools are managed outside of projects, so start from https://abacus.ai/app.

- Select Tools from the dropdown menu

- Click Create Tool in the top right corner

Tool Creation Process

Basic Configuration:

- Provide a name for the tool

- Select the tool type (Python Function or Connector)

Tool-Specific Configuration:

Python Function Tools can be created using two approaches:

- Write custom Python code directly

- Provide detailed instructions describing the tool's purpose, functionality, and invocation criteria

Connector Tools require:

- Selection of an existing platform connector (Jira or Confluence)

- Detailed instructions describing the tool's purpose, functionality, and invocation criteria

Implementation Details

When creating a custom tool, Abacus automatically generates a notebook with sample code to guide you through the process.

Standard Response Format: By default, tools return JSON output that the LLM can process after invocation.
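As a minimal sketch of the standard response format, a Python Function tool can return a plain dictionary that serializes to JSON. The function name `lookup_order_status`, its parameter, and the in-memory `ORDERS` table are hypothetical and chosen only for illustration; the notebook that Abacus generates may structure the function differently.

```python
import json

# Hypothetical in-memory data standing in for an external system.
ORDERS = {
    "A-1001": {"status": "shipped", "items": 3},
    "A-1002": {"status": "processing", "items": 1},
}


def lookup_order_status(order_id: str) -> dict:
    """Hypothetical tool body: return a JSON-serializable dict for the LLM."""
    order = ORDERS.get(order_id)
    if order is None:
        # Report the miss as data so the LLM can explain it to the user.
        return {"found": False, "order_id": order_id}
    return {"found": True, "order_id": order_id, **order}


if __name__ == "__main__":
    # Confirm the result serializes cleanly to JSON.
    print(json.dumps(lookup_order_status("A-1001")))
```

Returning failures as ordinary fields, rather than raising, is a design choice in this sketch: it keeps the output in the same JSON shape whether or not the lookup succeeds.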

Document Response Format: Tools can also return documents or files using the following notation (note: this differs from AI Workflows):

    return {
        'file_blob': abacusai.Blob(
            contents=bytes_data,  # bytes of file
            mime_type=(
                'application/vnd.openxmlformats-officedocument.'
                'presentationml.presentation'
            ),
            filename='my_document.pptx',
            size=len(bytes_data)
        )
    }
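The fragment above can be wrapped in a small helper, sketched here under stated assumptions. `build_file_response`, `MIME_TYPES`, `FakeBlob`, and the `blob_factory` parameter are hypothetical names introduced for illustration. In a real tool you would pass `abacusai.Blob` as the factory, with the keyword arguments shown above; the stand-in class only lets the sketch run without the Abacus.AI client installed.

```python
from dataclasses import dataclass
from pathlib import Path

# Small extension-to-MIME map (illustrative, not exhaustive).
MIME_TYPES = {
    ".pptx": "application/vnd.openxmlformats-officedocument.presentationml.presentation",
    ".pdf": "application/pdf",
    ".csv": "text/csv",
}


@dataclass
class FakeBlob:
    """Stand-in mirroring the keyword arguments shown for abacusai.Blob."""
    contents: bytes
    mime_type: str
    filename: str
    size: int


def build_file_response(bytes_data: bytes, filename: str, blob_factory=FakeBlob) -> dict:
    """Build the document-response dict; pass abacusai.Blob as blob_factory in a real tool."""
    suffix = Path(filename).suffix.lower()
    # Fall back to a generic binary type for unknown extensions.
    mime_type = MIME_TYPES.get(suffix, "application/octet-stream")
    return {
        "file_blob": blob_factory(
            contents=bytes_data,
            mime_type=mime_type,
            filename=filename,
            size=len(bytes_data),
        )
    }
```

Injecting the blob class keeps the MIME lookup and size calculation testable on their own, separate from the platform client.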

For detailed examples of tool configurations and prompting best practices, see the Tool Examples page.

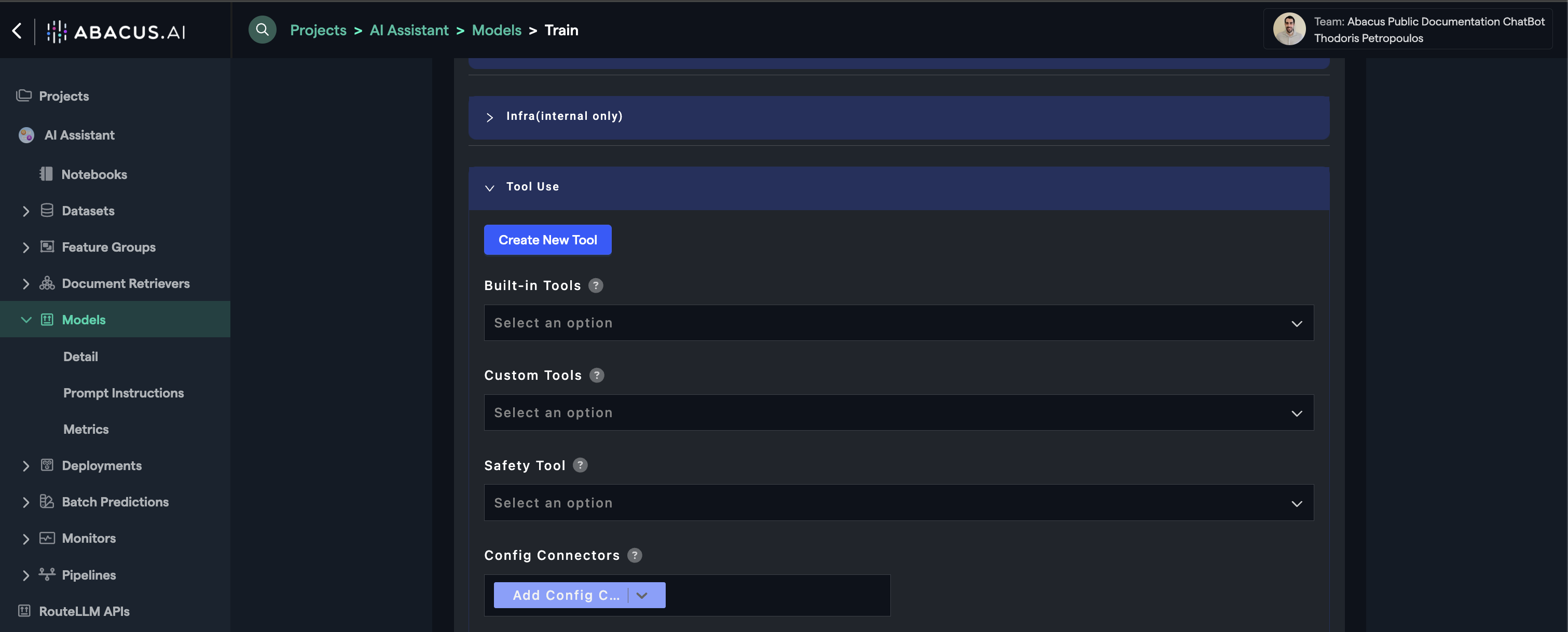

Adding Tools to Chatbots

After you have created a tool, you can add it to any custom Chatbot through the model training options.

The LLM then invokes the tool according to the instructions you provided.

Abacus.AI also offers many built-in user connectors and tools for custom Chatbots. Once a Chatbot is configured with one of these, it uses each individual user's access credentials to query data on the fly from systems such as:

- Google Drive

- OneDrive

- SharePoint

- Salesforce

- Gmail

- Outlook

- And others

Safety Tool

The safety tool feature lets you create custom prompt evaluations that block or allow messages before they reach the underlying chatbot. This lets you enforce organization-defined rules for permissible content in user messages.

Note: This feature is only available for custom chatbots built through a ChatLLM project.

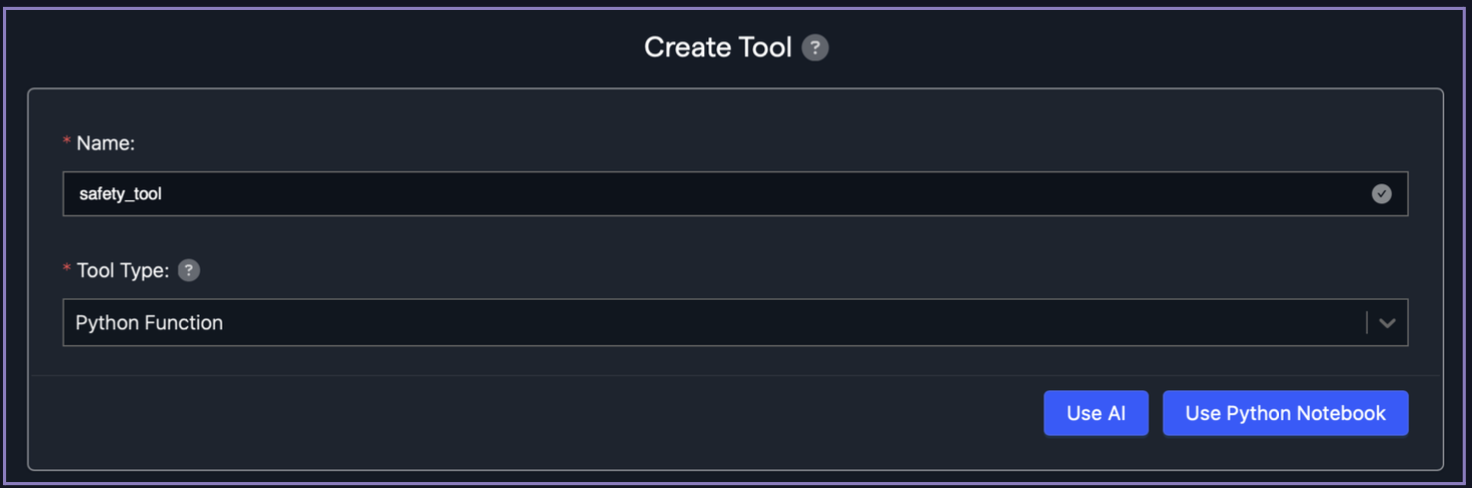

Creating a Safety Tool

Safety Tools can be created in the same way as other custom tools. Navigate to the Abacus home page, then in the left-hand menu select Custom Code >> Tools.

Make sure the tool type is Python Function and then build the tool either using the AI-assisted approach or directly with Python.

Note: When building the tool, the input should be a list of messages and the evaluation process should return either {'SUCCESS': True} to allow messages or {'SUCCESS': False} to block them.

Here is a simple example that looks for a keyword, "abacus", and then blocks the message to the chatbot if it is found:

    def safety_tool(messages):
        # Get last message
        message = messages[-1]["text"]

        # Main logic: check presence of 'abacus' keyword
        if "abacus" in message.lower():
            return {'SUCCESS': False, 'UNKNOWN_ANSWER': 'custom message'}  # Do not pass message to chatbot
        else:
            return {'SUCCESS': True}  # Let chatbot respond
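A slightly more general variant is sketched below, assuming the same message shape as the example above (a list of dictionaries with a "text" key). The names `BLOCKED_TERMS` and `safety_tool_multi` are hypothetical. Treating an empty message list as allowed is an assumption, not documented platform behavior. Unlike the substring check above, this version matches whole words only.

```python
import re

# Hypothetical blocklist; replace with your organization's terms.
BLOCKED_TERMS = ["abacus", "internal only"]

# Whole-word, case-insensitive match, so "abacuses" is not blocked.
_BLOCKED_RE = re.compile(
    r"\b(?:" + "|".join(re.escape(term) for term in BLOCKED_TERMS) + r")\b",
    re.IGNORECASE,
)


def safety_tool_multi(messages):
    # Assumption: with no messages there is nothing to block.
    if not messages:
        return {"SUCCESS": True}

    # Evaluate only the most recent message, as in the example above.
    text = messages[-1].get("text", "")

    if _BLOCKED_RE.search(text):
        # Block the message and return a custom reply.
        return {"SUCCESS": False, "UNKNOWN_ANSWER": "This request cannot be processed."}
    return {"SUCCESS": True}
```

Calling the function locally with a few sample message lists before attaching it to a chatbot is a quick way to check that the rules behave as intended.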

To add the tool, use the new Safety Tool option in the ChatLLM configuration screen.

With the safety tool deployed on a custom chatbot, each time a user asks a question, the tool evaluates whether the prompt is "safe" to pass to the underlying chatbot.